

Research

Multimodal classification of the focus of attention

Abstract

In the German SmartWeb project, the user interacted with the web via a smartphone to get information on, for example, points of interest. To avoid tedious mechanisms such as push-to-talk while still being able to tell whether the user is addressing the system or talking to herself or to a third person, we developed a module that monitors speech and video in parallel. Our database was recorded in a real-life setting, indoors as well as outdoors, under unfavourable acoustic and lighting conditions. With acoustic features, we classify up to four different types of addressing: talking to the system (On-Talk), reading from the display (Read Off-Talk), paraphrasing information presented on the screen (Paraphrasing Off-Talk), and talking to a third person or to oneself (Spontaneous Off-Talk). With the camera of the smartphone, we record the user's face and decide whether the user is looking at the phone or somewhere else. We use three different types of turn features based on classification scores of frame-based face detection and word-based analysis: 13 acoustic-prosodic features, 18 linguistic features, and 9 video features. The classification rate for acoustics only is up to 62 % for the four-class problem, and up to 77 % for the most important two-class problem "user is focussing on interaction with the system or not". For video only, it is 45 % and 71 %, respectively. By combining the two modalities and additionally using linguistic information, classification performance for the two-class problem reaches up to 85 %.
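The abstract reports classification rates without naming the exact measure. Two common choices are the overall recognition rate (share of all turns classified correctly) and the class-wise averaged rate (unweighted mean of per-class recalls); which one the figures above correspond to is not stated here. The minimal Python sketch below computes both from a four-class confusion matrix. The counts are invented for illustration and are not SmartWeb results.

```python
from typing import List, Sequence

# Rows: true class, columns: predicted class.
# Assumed order: On-Talk, ROT, POT, SOT.
Confusion = Sequence[Sequence[int]]


def overall_rate(confusion: Confusion) -> float:
    """Share of all turns on the diagonal (correctly classified)."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


def class_wise_rate(confusion: Confusion) -> float:
    """Unweighted mean of per-class recalls; every class counts equally."""
    recalls: List[float] = []
    for i, row in enumerate(confusion):
        n = sum(row)
        if n == 0:
            raise ValueError(f"no reference turns for class index {i}")
        recalls.append(row[i] / n)
    return sum(recalls) / len(recalls)


# Hypothetical counts, for illustration only.
confusion = [
    [40, 5, 3, 2],   # On-Talk: 40 of 50 correct
    [4, 12, 3, 1],   # ROT: 12 of 20 correct
    [3, 4, 8, 5],    # POT: 8 of 20 correct
    [1, 1, 3, 5],    # SOT: 5 of 10 correct
]
print(f"overall:    {overall_rate(confusion):.3f}")     # 0.650
print(f"class-wise: {class_wise_rate(confusion):.3f}")  # 0.575
```

The class-wise rate is the more cautious figure when classes are unbalanced, because a frequent class cannot dominate it.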

Investigated problem

Classifying whether the user of a smartphone is communicating with the system by speech input or not.

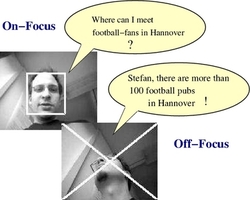

The following image shows the user from the perspective of the smartphone camera:

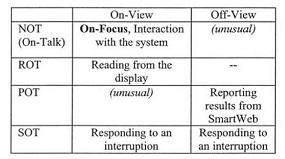

Classification of On-View/Off-View, ROT (Read Off-Talk), POT (Paraphrasing Off-Talk), SOT (Spontaneous Off-Talk), and NOT (no Off-Talk, i.e., On-Talk):

Classification

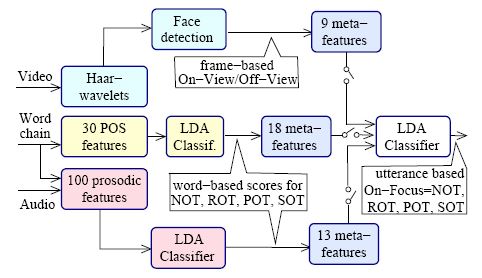

For automatic classification of the focus of attention, we used Haar wavelets with AdaBoost for On-View/Off-View detection and prosodic features for On-Talk/ROT/POT/SOT classification. Linguistic features based on part of speech (POS), e.g., "a content word follows", are used in the third classification task. Fusion of the modalities was based on meta features describing each subsystem in a low-dimensional feature space:
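For readers unfamiliar with Haar wavelets and AdaBoost in face detection, the minimal Python sketch below shows how such a detector scores one image window, in the spirit of the Viola-Jones approach. That this is the exact variant used in the project is an assumption. The sketch computes an integral image, one two-rectangle Haar-like feature, and a weighted vote of decision stumps. The window, rectangle, threshold, polarity, and weight are hypothetical. A real detector combines many AdaBoost-selected features in a cascade and scans positions and scales, none of which is shown here.

```python
from dataclasses import dataclass
from typing import List, Sequence

Image = Sequence[Sequence[int]]
Integral = List[List[int]]


def integral_image(img: Image) -> Integral:
    """ii[y][x] holds the sum of img over rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Integral, x: int, y: int, w: int, h: int) -> int:
    """Pixel sum of the w x h rectangle with top-left corner (x, y), in O(1)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_vertical(ii: Integral, x: int, y: int, w: int, h: int) -> int:
    """Two-rectangle Haar-like feature: upper half minus lower half."""
    half = h // 2
    return rect_sum(ii, x, y, w, half) - rect_sum(ii, x, y + half, w, half)


@dataclass
class Stump:
    """Weak classifier on one feature; all values here are hypothetical."""
    x: int
    y: int
    w: int
    h: int
    threshold: float
    polarity: int  # +1 or -1, selects the direction of the inequality
    alpha: float   # weight AdaBoost would assign to this weak classifier

    def predict(self, ii: Integral) -> int:
        f = two_rect_vertical(ii, self.x, self.y, self.w, self.h)
        return 1 if self.polarity * f < self.polarity * self.threshold else 0


def strong_classify(window: Image, stumps: Sequence[Stump]) -> bool:
    """AdaBoost decision: weighted votes must reach half the total weight."""
    ii = integral_image(window)
    votes = sum(s.alpha * s.predict(ii) for s in stumps)
    return votes >= 0.5 * sum(s.alpha for s in stumps)


# Toy 4x4 window: bright upper half, dark lower half.
window = [[9, 9, 9, 9], [9, 9, 9, 9], [1, 1, 1, 1], [1, 1, 1, 1]]
stumps = [Stump(x=0, y=0, w=4, h=4, threshold=20, polarity=-1, alpha=1.0)]
print(strong_classify(window, stumps))        # True
print(strong_classify(window[::-1], stumps))  # False
```

The integral image is what makes Haar-like features cheap: any rectangle sum costs four lookups, regardless of rectangle size.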

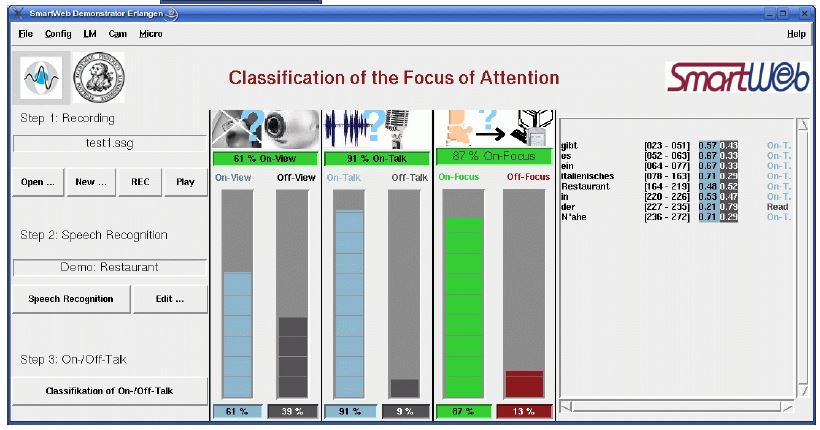
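One plausible reading of fusion via low-dimensional meta features is shown in the minimal Python sketch below. Each subsystem's frame-level or word-level scores over a turn are summarized by a few statistics, and the summaries are concatenated into one turn vector for a turn-level classifier. The statistics (mean, minimum, maximum, share of scores at or above 0.5), the assumption that scores are probabilities in [0, 1], and the function names are hypothetical. The page does not list the actual 9 video, 13 acoustic-prosodic, and 18 linguistic features.

```python
from statistics import mean
from typing import List, Sequence


def summarize(scores: Sequence[float], threshold: float = 0.5) -> List[float]:
    """Hypothetical low-dimensional summary of one subsystem over a turn."""
    if not scores:
        raise ValueError("a turn needs at least one score")
    share_above = sum(1 for s in scores if s >= threshold) / len(scores)
    return [mean(scores), min(scores), max(scores), share_above]


def turn_meta_features(
    frame_on_view: Sequence[float],
    word_on_talk_prosodic: Sequence[float],
    word_on_talk_linguistic: Sequence[float],
) -> List[float]:
    """Concatenate per-subsystem summaries into one turn-level vector."""
    return (
        summarize(frame_on_view)
        + summarize(word_on_talk_prosodic)
        + summarize(word_on_talk_linguistic)
    )


frames = [0.9, 0.8, 0.2, 0.7]  # hypothetical per-frame On-View scores
prosodic = [0.6, 0.4]          # hypothetical per-word On-Talk scores (prosody)
linguistic = [0.5, 0.5]        # hypothetical per-word On-Talk scores (POS)
vector = turn_meta_features(frames, prosodic, linguistic)
print(len(vector))  # 12
```

Summarizing before fusing keeps the fused vector small and independent of turn length, because frames and words differ in number from turn to turn.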

Demonstrator

The demonstrator allows step-by-step speech recording, recognition, and classification (left). The right part shows the word-based recognition results together with their On-Talk scores. The middle part shows the sentence-based scores for On-Talk/Off-Talk, On-View/Off-View, and, after fusion, On-Focus/Off-Focus.

Own Publications


Batliner, Anton; Hacker, Christian; Nöth, Elmar

To Talk or not to Talk with a Computer - Taking into Account the User's Focus of Attention

Journal on Multimodal User Interfaces, vol. 2, no. 28, pp. 171-186, 2008

Batliner, Anton; Hacker, Christian; Kaiser, Moritz; Mögele, Hannes; Nöth, Elmar

Taking into Account the User's Focus of Attention with the Help of Audio-Visual Information: Towards less Artificial Human-Machine-Communication

In: Krahmer, Emiel; Swerts, Marc; Vroomen, Jean (Eds.) AVSP 2007 (International Conference on Auditory-Visual Speech Processing 2007, Hilvarenbeek, 31.08.-03.09.2007) 2007, pp. 51-56


Hacker, Christian; Batliner, Anton; Nöth, Elmar

Are You Looking at Me, are You Talking with Me -- Multimodal Classification of the Focus of Attention

In: Sojka, P.; Kopecek, I.; Pala, K. (Eds.) Text, Speech and Dialogue. 9th International Conference, TSD 2006, Brno, Czech Republic, September 2006, Proceedings (9th International Conference, TSD 2006, Brno, 11-15.9.2006). Berlin, Heidelberg: Springer 2006, pp. 581-588, ISBN 978-3-540-39090-9

Nöth, Elmar; Hacker, Christian; Batliner, Anton

Does Multimodality Really Help? The Classification of Emotion and of On/Off-Focus in Multimodal Dialogues - Two Case Studies.

In: Grgic, Mislav; Grgic, Sonja (Eds.) Proceedings Elmar-2007 (Elmar-2007, Zadar, 12.-14.09.). Zadar: Croatian Society Electronics in Marine - ELMAR 2007, pp. 9-16, ISBN 978-953-7044-05-3

Batliner, Anton; Hacker, Christian; Nöth, Elmar

To Talk or not to Talk with a Computer: On-Talk vs. Off-Talk

In: Fischer, Kerstin (Ed.) How People Talk to Computers, Robots, and Other Artificial Communication Partners (How People Talk to Computers, Robots, and Other Artificial Communication Partners, Bremen, April 21-23, 2006) 2006, pp. 79-100

Maier, Andreas; Hacker, Christian; Steidl, Stefan; Nöth, Elmar; Niemann, Heinrich

Robust Parallel Speech Recognition in Multiple Energy Bands

In: Kropatsch, Walter G.; Sablatnig, Robert; Hanbury, Allan (Eds.) Pattern Recognition, 27th DAGM Symposium, Vienna, Austria, August/September 2005, Proceedings (27th DAGM Symposium, Vienna, Austria, 30 August - 2 September 2005), Vol. 1. Berlin: Springer 2005, pp. 133-140, ISBN 3-540-28703-5
